meina222

Donator

Posts: 449

Days Won: 5

Everything posted by meina222

-

Let me test it first tonight. I didn't get a chance to install it on Proxmox yet and I'm busy at work until this evening US ET. The .deb files are about 70MB un-archived. I can try to archive them and upload them to GitHub. Or is there space here? I did install 5.8 on my Ubuntu and am working from it now - seems great - fan speed control is finally in sync with the BIOS profiles, which is the only obvious (audible) improvement. Edit: I tried uploading the tar.gz of the .deb files here. It comes to about 76MB. The board here has a limit of 48MB.

-

I succeeded in building pve-edge-kernel against Linux 5.8 and the latest ZFS mainline (similar to an OpenCore daily build - note that this is NOT AN OFFICIAL ZFS release). I used the latest gcc 10.2. So truly "bleeding edge". Expect to "bleed" tomorrow testing it. Unfortunately my VM pool is ZFS software RAID, so I will have to test it on different storage, as I'm not inclined to lose my partitions. But if you don't use ZFS, this should "just work" (I hope).

Here's roughly what I did. This assumes you're familiar with git and git submodules and have an environment where you can build the Linux kernel. Pasting my email to Fabian that I just fired off.

1. Created new git submodules named "linux" and "openzfs/upstream" alongside the existing "ubuntu-mainline" and "zfsonlinux" modules.
   - SHA of linux is bcf876870b95592b52519ed4aafcf9d95999bc9c (Torvalds' 5.8 tag)
   - SHA of openzfs/upstream is f1de1600d132666d03b3b73a2ab62695a0f60ead, which happens to be the latest of origin/master of https://github.com/openzfs/zfs
2. Copied "debian" and "debian.master" from the existing ubuntu-mainline submodule to the "linux" submodule. Copied "debian" and the Makefile from the existing "zfsonlinux" submodule to the "openzfs" folder.
3. Changed the pve-edge-kernel root Makefile to refer to the "linux" and "openzfs" folders instead of "ubuntu-mainline" and "zfsonlinux".
4. Fired off make. 2 patches failed immediately ("fuzz") in openzfs/debian/patches (copied from zfsonlinux/debian/patches):
   - 0002-always-load-ZFS-module-on-boot.patch
   - 0007-Use-installed-python3.patch

0002 was a trivial patch, so I manually uncommented #zfs in etc/modules-load.d/zfs.conf of the zfs root (upstream folder), which I believe should enable Proxmox to load zfs automatically. I didn't bother with 0007-Use-installed-python3.patch. After removing the 2 patches from the series the build succeeded.

I specified zen2 and ended up with the following files:

- linux-tools-5.8_5.7.8-1_amd64.deb
- pve-edge-headers-5.8.0-1-zen2_5.7.8-1_amd64.deb
- pve-edge-kernel-5.8.0-1-zen2_5.7.8-1_amd64.deb
- pve-kernel-libc-dev_5.7.8-1_amd64.deb

libc-dev didn't update. I think this is specified somewhere in the root debian folder, but I might try to fire it off tomorrow regardless with just the kernel and kernel headers + tools. I will probably avoid using ZFS in this test.

If anyone is interested in reproducing my results, I can post instructions on how to prepare your environment to build the kernel. You can do it on Ubuntu (as I did) or on Proxmox itself. The biggest pain/obstacle is installing all the toolchain packages needed to build the kernel.
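For anyone who prefers to read the steps above as commands, here is a rough dry-run sketch. It only prints what it would do unless you set DRY_RUN=0. The two SHAs and the openzfs URL come from the post. The Linux repository URL, the helper names (`run`, `filter_series`, `plan_build`) and the exact copy destinations are my assumptions for illustration, and the root Makefile change (step 3) stays a manual edit.

```shell
#!/bin/sh
# Sketch only: with DRY_RUN=1 (the default) every command is printed, not executed.
DRY_RUN="${DRY_RUN:-1}"

LINUX_SHA="bcf876870b95592b52519ed4aafcf9d95999bc9c"  # from the post (5.8 tag)
ZFS_SHA="f1de1600d132666d03b3b73a2ab62695a0f60ead"    # from the post (openzfs master)
LINUX_URL="https://github.com/torvalds/linux"         # assumption: not named in the post
ZFS_URL="https://github.com/openzfs/zfs"              # from the post

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Print a quilt-style series file without the named patches (stdout only).
# Assumes patch names contain no spaces.
filter_series() {
  series_file="$1"; shift
  awk -v drop="$*" '
    BEGIN { n = split(drop, d, " "); for (i = 1; i <= n; i++) skip[d[i]] = 1 }
    !($0 in skip)
  ' "$series_file"
}

# Steps 1, 2 and 4 from the post, run from the pve-edge-kernel checkout.
plan_build() {
  run git submodule add "$LINUX_URL" linux &&
  run git -C linux checkout "$LINUX_SHA" &&
  run git submodule add "$ZFS_URL" openzfs/upstream &&
  run git -C openzfs/upstream checkout "$ZFS_SHA" &&
  run cp -r ubuntu-mainline/debian ubuntu-mainline/debian.master linux/ &&
  run cp -r zfsonlinux/debian zfsonlinux/Makefile openzfs/ &&
  run make
}
```

Call `plan_build` to see the command list. To get a series file without the two failing patches, something like `filter_series openzfs/debian/patches/series 0002-always-load-ZFS-module-on-boot.patch 0007-Use-installed-python3.patch` prints the filtered list so you can review it before overwriting anything.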

-

@fabiosun - zfs support is an integral part of Proxmox - it will be very hard to try and decouple it - zfs is one of the options for creating host storage, and you can specify it in the GUI itself. What I am hoping to do is build zfs from the latest mainline and have it compile, but I would not recommend setting up zfs host storage pools with it, as I am not sure one can guarantee full data integrity. FYI OC 0.6.0 was officially released today https://github.com/acidanthera/OpenCorePkg/releases

-

Small update on trying to get an early build for Proxmox on Linux 5.8. My initial hope was that 5.8 would finally address the AMD reset issues, as it has a host of AMD-related improvements (both CPU and graphics driver), but I am not as optimistic about the reset issue now. Nonetheless, it's a huge release and many people will test it on bare-metal Ubuntu and other distros, but it will take quite a while to make it to Proxmox. It would be nice to see what improvements or issues it brings, especially with pass-through and host power management.

Fabian confirmed to me that he's not able to build pve-edge-kernel against it yet. He hit the same issue I had when I tried to compile it, but pointed out that someone has ported ZFS to 5.8 already. When I find time I will try to follow his lead. If anyone else is interested in trying this, PM me.

> Thank you for your interest! I have tried to build a 5.8-rc4 kernel but stumbled upon the same issue you are having. I made a quick attempt to backport some of the changes from upstream ZFS, which did not succeed however. I have been rather conservative with making changes to ZFS since (1) I am not that familiar with the code base and (2) I don't use ZFS myself (thus I cannot test it).
>
> In the meantime I haven't yet had time to look at the latest releases due to holidays. Though, I saw that Nix managed to build ZFS for Linux 5.8-rc7, so I'll have a look at that soon.
>
> Kind regards,
> Fabian Mastenbroek
>
>> On 3 Aug 2020, at 16:26, [redacted] wrote:
>>
>> Hi Fabian,
>>
>> Sorry for using email, not sure if this is the best way to communicate with you, but figured to try as it is listed in your github. With Linux 5.8 released I tried to build https://github.com/fabianishere/pve-edge-kernel by essentially replacing the ubuntu mainline submodule with Linux mainline cut by Torvalds yesterday. All good with the kernel build but ZFS build failed. There is some sort of incompatibility with 5.8 - I got a compile error possibly related to this ZFS entry - https://github.com/openzfs/zfs/pull/10422
>>
>> Any plans to soon build pve-edge-kernel with 5.8 or do you plan to wait for ZFS to make a 5.8 compatible release? Have you tried to build pve against 5.8 release candidates and would that work even if ZFS is fixed? I am tempted to try and backport ZFS changes from ZFS mainline to make the build succeed, but not sure if there are other surprises that await me after that.
>>
>> Cheers and thanks for sharing that work on github,

p.s. I've redacted emails from this thread so they don't get picked up by spam crawlers.

-

@fabiosun is right. The SSDT and CPU as host have nothing to do with each other. This is all related to support in the bootloader - OC, I suspect (and more specifically what the AMD OSX community is doing to make AMD CPUs work in macOS). The VM can boot with only OC + Lilu, no extra kexts and no SSDT (please, anyone, correct me if I'm wrong).

-

>RE Ethernet Port: Currently macos sees the Aquantic Ethernet port, which leads me to a question. If Proxmox is connected to one port, would the macos need a second ethernet connection via the Aquantic port? @tsongz - the way I do this is as follows: I have 2 Ethernets. I set up a VM Bridge with the gateway/router using 1 Ethernet for the host and pass the 2nd Ethernet to the VM (Catalina). That way both the host and the guest access the internet. When I set up Big Sur VM2 I wanted to use a different ROM / Mac address than Catalina so I swapped the Ethernets. That unfortunately meant that while Big Sur is up, the host loses the connection (but Big Sur still has it) as the gateway is only associated with 1 of the ethernets. The reason I did this is because I did not want to have Apple system record same ROM form 2 different Mac's trying to activate - likely won't work but didn't try it. This is only an issue with 2 Mac VMS though. You do need ethernet to activate iMessage, but once activated you can go back to vmx or wireless if you need your Ethernet back - I choose to leave it.

-

I also used Nick Sherlock as a start, then after doing research realized that OC and the Hackintosh community had moved beyond - but my 1st VM 2 months ago was from Nick's article. But the person who popularized Threadripper + MacOS was @fabiosun. It was his post (I wasn't registered at the time) that I saw in Google that hinted this was a thing.

-

Today Linus Torvalds released Linux kernel 5.8. I downloaded it as a git submodule and tried to link it to pve-edge-kernel and build it. The 5.8 kernel built just fine, but the ZFS build failed, as the 0.8.4 release from May used in Proxmox / pve-edge is not compatible with 5.8. The failure I encountered is https://github.com/openzfs/zfs/pull/10422

Unfortunately Torvalds is not a fan of ZFS, so he couldn't care less about breaking it. We may have to wait for a ZFS release to be cut from the openzfs project. I can try merging the current ZFS master branch and rebuilding Proxmox with that from https://github.com/fabianishere/pve-edge-kernel, but that's a dicey approach - on the other hand if you (unlike me) don't use ZFS, you probably won't care. I'll give it a shot and keep you posted. I might get in touch with Fabian too, to see if we can cut an experimental ZFS, but I suspect they will wait for an official release from openzfs. 5.8 is a huge release and I am looking forward to a 5.8 kernel hypervisor in the coming weeks.

Edit: News release. Linus Torvalds now owns a Threadripper 3970x, so lots of AMD love is coming to Linux, only some of which is mentioned briefly here https://www.theregister.com/2020/08/03/linux_5_8_released/

-

@tsongz: A few remarks to help you get where you want to be:

Bluetooth: Save yourself the trouble with the AX200 and get either a macOS-native PCIE x1 card or a Bluetooth USB dongle with a Broadcom chip supported by macOS. For example, I have verified the following to work in a Proxmox VM (including my macOS keyboard and trackpad, iPhone pairing, AirDrop, Continuity etc.), which as a bonus includes native WiFi: https://www.amazon.com/gp/product/B082X8MBMD/

If you don't have a free PCIE slot (my motherboard has an x1 slot so I don't have to use a full slot, but the ASRock may not have one), use a dongle: https://www.amazon.com/GMYLE-Bluetooth-Dongle-V4-0-Dual/dp/B007MKMJGO/

⚠️ Make sure to disable the AX200 first in macOS by running 'sudo nvram bluetoothHostControllerSwitchBehavior=never', or else the AX200 will get picked up and show up as an Ericsson 00-00-00-00-00-00 device. When you restart, the BCM chip should get picked up.

Big Sur and CPU host works now. I would suggest using it with a later build of OC and Lilu. Start with the minimum kexts - my Big Sur only works with Lilu, and even if there are no power savings on the 5700XT I am not bothered by it. You would need a USB kext to use the keyboard in VM mode, but that's about all you need to start booting. Add Whatevergreen and other stuff as you need. For example, my BS VM config only has this for CPU:

    args: -device isa-applesmc,osk="[redacted apple haiku - fill yourself]" -smbios type=2 -device usb-kbd,bus=ehci.0,port=2 -cpu host,+invtsc,vendor=GenuineIntel

You will get a significant performance boost from that. Since you mentioned Pavo - start with this config.plist and add kexts as you need. It's barebones and it actually works with Big Sur. https://github.com/Pavo-IM/Proxintosh/tree/master/Big_Sir

To get iMessage to work, ditch the vmx adapter and pass through one of the Ethernets. I have an Intel I210, which macOS recognizes - you need to ensure it's designated as 'built-in'. After you pass it through, check the PCIE id in macOS and add it to the config.plist as 'built-in', and add the ROM / MAC address in the config.plist as described in https://dortania.github.io/OpenCore-Post-Install/universal/iservices.html#generate-a-new-serial

Use your VGA VM mode to do installs/upgrades/tests to avoid the reset bug, then when satisfied, get the graphics card in and enjoy.

-

@fabiosun, thanks for creating this thread. I am interested in this topic too. Before I study this, which may take me a while, I have a couple of questions:

1. If you flash the Titan Ridge card with a macOS-compatible firmware, does it render it useless in Linux? I don't have Windows, so I'm less concerned about that, but I certainly don't want to lose host support.
2. As a simpler goal than getting a macOS VM to use TB3 devices - as TB3 devices are supposed to act like PCIE devices - has anyone successfully passed through a TB3 device connected to the card without passing through the TB3 controller itself? I don't have an external GPU or TB3 disk to try yet, but as all my PCIE slots are taken, future expansions would inevitably have to happen via TB3 or USB.

Thanks.

-

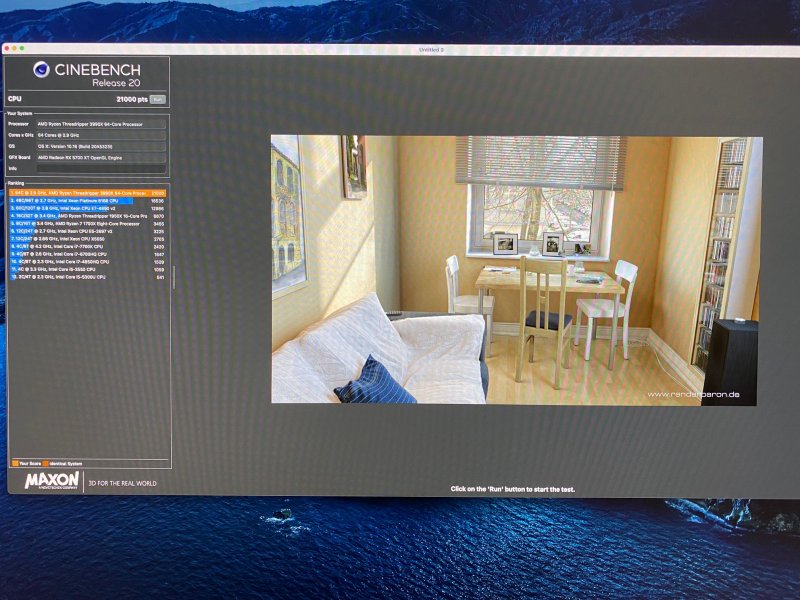

While on the topic of benchmarks, I decided to see if there is any effect from "pinning" the host CPU threads given to the VMs. "Isolation" or "pinning" is something Linux supports - a full understanding of this term requires knowing about NUMA and OS scheduling, but essentially it means that once a CPU "resource" such as a logical core is given to a process (the VM) by the host, it cannot be "taken away". This would mostly be of importance if you run 2 VMs and want maximum performance on both - the host won't be able to bounce threads between the two. Anyway, I was curious whether the equivalent of "pinning" would benefit benchmarks.

In Proxmox "pinning" is achieved via "tasksets". Here's how to enable it, using my Big Sur VM (id 101) as an example:

1. Start the VM.
2. "Pin" your CPUs to the started VM. In my case I use CPU ids 0-63. Run this command (change the CPU range and VM id according to your setup):

       taskset --cpu-list --all-tasks --pid 0-63 "$(< /run/qemu-server/101.pid)"

Edit: What this did for my 64C/128T CPU is essentially fix the macOS VM threads to run on logical cores 0-63 and never be scheduled on 64-127. On a 3970x this benefit may only be visible if you run 32 cores in macOS. In that case you would pin 0-31 and see a benefit, but I am not sure this would work if you already give macOS all of your 64 logical cores. This is an example of how the extra cores of the 3990x can help without trying to run the VM arg -smp 128 for macOS, which doesn't seem to be possible.

For more info on how to hook this to your VM on every start: https://forum.proxmox.com/threads/cpu-pinning.67805/

Then I ran Geekbench again. The multicore score went up by more than 1000 points, from about 28000 to 29500. I believe this is more than just variance. Single core didn't change. https://browser.geekbench.com/v5/cpu/3142197

Cinebench benefited a bit less: from 21000 to 21100.

Of course the increases in both cases are relatively minor and there is still a chance this could be variance, but if you care about benchmarks and, like me, don't want to kill the power line by overclocking, it's a nice trick to get an extra 2-3%. The benefit would be much bigger if you run X VMs and want to "isolate" one from the other. Then you can split the cores into X task sets and give one to each VM.
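To illustrate the "split the cores into X task sets" idea, here is a small sketch that divides the logical CPUs into equal contiguous ranges and prints one taskset command per VM. The function names `cpu_ranges` and `pin_plan` are hypothetical, and the contiguous split is my simplification: it ignores which logical CPUs are SMT siblings and any NUMA layout, so check your own topology before relying on it. It prints the commands and does not run them.

```shell
#!/bin/sh
# Print COUNT contiguous ranges covering logical CPUs 0..TOTAL-1, one per line.
# If TOTAL is not divisible by COUNT, the leftover CPUs stay unassigned.
cpu_ranges() {
  total="$1"; count="$2"
  size=$((total / count))
  i=0
  while [ "$i" -lt "$count" ]; do
    start=$((i * size))
    end=$((start + size - 1))
    printf '%s\n' "${start}-${end}"
    i=$((i + 1))
  done
}

# Print (not run) one taskset command per VM id, giving each VM its own range.
# Usage: pin_plan TOTAL_LOGICAL_CPUS VMID [VMID...]
pin_plan() {
  total="$1"; shift
  ranges="$(cpu_ranges "$total" "$#")"
  n=1
  for vmid in "$@"; do
    range="$(printf '%s\n' "$ranges" | sed -n "${n}p")"
    printf '%s\n' "taskset --cpu-list --all-tasks --pid ${range} \"\$(cat /run/qemu-server/${vmid}.pid)\""
    n=$((n + 1))
  done
}
```

For example, `pin_plan 128 100 101` prints a command pinning VM 100 to 0-63 and VM 101 to 64-127. The VMs have to be running first, since the pid files only exist after start.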

-

CPU Cinebench attached as requested (Big Sur beta 3 on pve-kernel 5.44-2). ~21000, give or take 100 depending on the run.

-

@fabiosun, @iGPU - I see you guys are having some TB3 fun. I have this card on my motherboard (Designare TRX40), installed in slot 2. I never tried to pass it through to one of the VMs, as I don't have a device yet that uses it (besides my TB3 monitor in Linux and charging, and that works just fine). I am hoping that if I get a PCIE external GPU, I can pass that through. Flashing the EEPROM and trying to pass through the entire TB3 controller seems like a very complicated exercise. Hope it works. Let me know if I can help, but I don't have an EEPROM flashing device at the moment.

- 145 replies

-

@fabiosun - I finally managed to get Big Sur Beta 3 working on my VM. The latest OC/Lilu versions indeed support passing CPU as host. Alas, 64 cores on 1 socket is the max. The booter hangs very early if you try to pass 128. I played with the -v parameter in OC, and the release build shows little to no useful logging that says why - it just halts very early in the process. One probably needs OC DEBUG to find out more. I think it's safe to assume nothing changed in that regard since Catalina for the VM world. The 64 core 3990x Geekbench result on Big Sur beta3 / pve-5.44.2 on MacPro7,1 looks even better than Catalina, especially multicore. I'm pleased with Beta3 despite 1 crash/freeze involving plugging and unplugging USB devices. https://browser.geekbench.com/v5/cpu/3136798

-

Replying with some delay, as I've been occupied lately. Point taken. I added lots of info that may help others who are looking at similar hardware. I don't think it's part of my signature, as it is too much text, but hopefully the full setup is visible if you click or hover on the profile.

-

I will try the link you suggested and see if I can enable the logs as I ran release/no log OC and didn't see the debug messages post on-screen - just the stuck Apple logo. Also, I realized my experiment was a bit tainted as I used my Catalina VM to download and create Big Sur .iso disk image and then install it. So I was already running the 64 core Catalina VM, when launching the 64 core Big Sur via the web console. Should have booted Big Sur w the Catalina VM off. I guess what that means is that you can easily run 2 64 core Mac VMs on 3990x, but not sure if that's of any practical interest other than the convenience of testing multiple hacks without shutting your primary Mac VM.

-

Also, since I saw people referring to this issue in a few posts: the OC version that worked from the link above indeed does not allow cpu host on a fresh install. Do later OC versions resolve this?

-

Thanks. I'll check the many pages of posts on these threads. Maybe I can find tips to fix sleep, which is the last remaining issue to tackle.

I tried to install Big Sur, but failed several times with newer OpenCores from daily builds. Does anyone have an EFI folder and kexts compatible with some later OC pre-release from the past few days? I then found https://www.nicksherlock.com/2020/06/installing-macos-big-sur-beta-on-proxmox/, which actually worked. I'll have to debug the latest OC builds to see what changed.

On CPU topology with the 3990x: it seems a power of 2 up to at most 64 boots fine on Penryn, but any other topology (not a power of 2, or more than 64, e.g. 128) would not. I tried Penryn 28 (no go), 32, 64 (fine), 128 (no go), all 1 socket. Last, I tried 2 sockets with 64 each - no go. So 64 still seems to be the limit.
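The pattern from those few data points can be written as a quick check to run before editing the VM core count. This is only a sketch of the observation in this post (single socket, power of 2, at most 64), not a documented macOS or OpenCore rule, and the function name `boots_per_observation` is made up for illustration.

```shell
#!/bin/sh
# Succeed if N matches the pattern seen in this post: a power of two, at most 64.
# Based only on the tested values 28, 32, 64 and 128 on a single socket.
boots_per_observation() {
  n="$1"
  [ "$n" -ge 1 ] && [ "$n" -le 64 ] && [ $((n & (n - 1))) -eq 0 ]
}
```

For example, `boots_per_observation 32 && echo ok` prints ok, while 28 and 128 fail the check.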

-

One interesting thing I uncovered today - maybe known to others in this forum. I wanted to test my Carbon Copy Cloner Sabrent partition by booting from it, and couldn't figure out how to boot straight from the NVME, which I pass through as a PCI controller, without having to emulate it as SCSI or IDE. It turns out (at least in Proxmox 6.2) that if you delete the entries for your SATA and EFI disks in the VM .conf, your VM will boot straight from the NVME (assuming it is passed through as PCI). Credit: https://forum.proxmox.com/threads/cannot-edit-boot-order-with-nvme-drive.49179/ (last post)

p.s. As CCC doesn't copy the macOS main disk EFI by default, you need to do that manually, once only, when you are happy with your OpenCore config, else it won't boot of course. OC crashes badly if you try to use the NVME as a Time Machine or CCC destination and forget to fix the EFI. Then the only way to boot is to remove the NVME PCI device, but that defeats the purpose of using it as a backup, so don't forget this step.
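If you'd rather not hand-edit the file, here is a minimal sketch of the "delete the SATA and EFI disk entries" step. It only prints the filtered config so you can diff it against the original first. The function name `without_emulated_disks` is hypothetical, and I'm assuming the entries look like `sata0: ...` and `efidisk0: ...` at the start of a line. It does not touch any boot order line or snapshot sections, so review the result before copying it back.

```shell
#!/bin/sh
# Print a VM config without its sataN and efidisk0 lines (stdout only, file untouched).
without_emulated_disks() {
  grep -Ev '^(sata[0-9]+|efidisk0):' "$1"
}
```

Usage would be something like `without_emulated_disks /etc/pve/qemu-server/101.conf > /tmp/101.conf.new`, then compare the two files and keep a backup of the original .conf so you can restore the disks later.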

-

I wasn't the only one with issues getting access to Big Sur by enrolling via the website. After running:

    sudo /System/Library/PrivateFrameworks/Seeding.framework/Versions/A/Resources/seedutil
    sudo /System/Library/PrivateFrameworks/Seeding.framework/Versions/A/Resources/seedutil unenroll
    sudo /System/Library/PrivateFrameworks/Seeding.framework/Versions/A/Resources/seedutil enroll DeveloperSeed

I can now download the update. I will try to install it tonight. Credit: https://mrmacintosh.com/big-sur-beta-not-showing-up-in-software-update-troubleshooting/

@fabiosun - thank you for the tip. I managed to fix it with the above steps before I saw your post. I will check on the tools you recommend.

@Driftwood: My setup is:

- Mobo: GB sTRX40 Designare, BIOS 4c
- CPU: 3990X
- RAM: 256G at 3200MHZ
- PCIE1x16: Asus Stock 5700XT (no longer made, got it for macOS compatibility, unfortunately suffers from reset)
- PCIE2x8: Titan Ridge TB (packed with the mobo, currently unused other than for charging, keeping it so I could pass through eGPUs in the future as all my other slots are populated)
- PCIE3 (4x4x4x4): Aorus Expander Card with x4 1G XPG Gammix PCIE4 NVMEs (zfs software RAID10 - this is the VMs storage pool, including the macOS VM boot and installation data)
- PCIE4x8 @1: Old Nvidia 240GT I use for the host. GB sTRX40 rev 1.0 mobos don't like this slot if all others are populated - seems to be either a lane sharing or BIOS constraint, but since I only need a host GPU, it's OK.
- 2 Sabrent PCIE4 1TB: one is used as passthrough to macOS - currently a destination for Carbon Copy Cloner and extra storage - the other is my Ubuntu 20.04 boot drive
- PCIE1x1: https://www.amazon.com/gp/product/B082X8MBMD/ (used as my wireless and bluetooth card, as it comes with Apple firmware and supports all features such as AirDrop, Continuity etc. out of the box)
- No overclocking besides memory
- 1 onboard Ethernet is passed to VMs (including macOS) and 1 is used by the host

The GB sTRX40 Designare is a tricky motherboard to make all the above work on, due to some PCIE lane issues - it's very sensitive to PCIE slot configuration, but the extra value from the expansion card and the TB card make it worthwhile, as everything else is quite stable once you figure out its quirks.

The only issues I have with this setup are:

- AMD reset. No existing patches would work for macOS, though the power patch should be OK with Windows. This issue is hopeless and can only be fixed in future Linux kernels if AMD decides to cooperate, or hopefully in future iterations of AMD cards.
- Sleep/Wake doesn't work on either the host or the guest. Not an issue in Ubuntu, haven't debugged Proxmox or macOS yet.

-

I have been running both the Zen 2 and General 5.7.8 versions of these for the past week. They won't solve the reset bug. As you know, the power on/off patch also doesn't work for macOS VFIO, and 5.7.8 is unlikely to change that (although I did not try to patch in 5.7.8). The problem with the reset issue is that AMD is unwilling to share details on how to bypass it on current generation GPUs, but fingers crossed they will address that with the next one. The pve-edge-kernel should be buildable against 5.8 too, if you change the submodule repo. If there's any chance of solving the reset bug, 5.8 may have a slim one, but I'm doubtful. I built 5.7.8.1 myself, so if anyone's interested I could try to build against the 5.8 rc5 sha.

Re: my earlier note on trying Big Sur on the 3990x. For some reason I couldn't download it from Apple with my developer account. Does anyone know how to enroll in the beta?

Since others are running the 3970x (which is a perfectly fine CPU for this setup), here are my stock (I can't overclock as I run air cooling) 3990x Geekbench numbers under 5.44-1/2 mainline. Curiously, 5.7.8 would consistently score about 1000 points lower in multicore, but the same or marginally better in single core. Not sure what to make of it, but the bottom line is: don't expect 5.7.8 to improve performance for your VM CPU-wise. Unless you have an issue with your current hardware, I wouldn't recommend it. https://browser.geekbench.com/v5/cpu/2974875

-

I have the 3990x/GB Designare TRX40 running a very similar setup to what's in this thread (Proxmox hosted VM + OpenCore), but I am still on Catalina 10.15.5. I used different resources to get it running (Nick Sherlock's excellent guide and teaching myself Proxmox and pass-through). I registered recently as this thread has some good info, so my first post is in response to your question.

To your question: I still haven't tried Big Sur, as I have a pretty stable and working build now, but I see beta 2 is available and resources on how to get it up are becoming more available, so I will try it in the next few days and let you know the max CPU/core limit. I boot 64 cores in Catalina, but never tried 128. For multi-core loads, macOS will definitely benefit from more physical vs logical cores even at the lower clock of the 3990x, but you will see very sub-linear scaling beyond that for most real-life loads. I don't have many benchmark data points beyond compiling Proxmox's pve-kernel (under Linux) and running Geekbench under Linux and macOS alike. For reference, the multi-core Geekbench 5 score of the 3990x at stock is in the 27200-27500 range in macOS vs 37000 in the latest stable Linux kernel (5.7.x) and maybe around 35000 on earlier LTS kernels. You can already see the decreasing benefit. The 3970x is a pretty sweet deal for its price.